HTCondorDebug

Why is my job still in the queue?

To see what is happening and why your jobs are not starting, use:

```
> condor_q -better-analyze 2993001.0

-- Schedd: schedd03.wn.iihe.ac.be : <192.168.10.147:9618?...
The Requirements expression for job 2993001.000 is

    (TARGET.Arch == "X86_64") && (TARGET.OpSys == "LINUX") && (TARGET.Disk >= RequestDisk) && (TARGET.Memory >= RequestMemory) && (TARGET.HasFileTransfer)

Job 2993001.000 defines the following attributes:

    DiskUsage = 1
    ImageSize = 1
    RequestDisk = DiskUsage
    RequestMemory = ifthenelse(MemoryUsage =!= undefined,MemoryUsage,(ImageSize + 1023) / 1024)

The Requirements expression for job 2993001.000 reduces to these conditions:

         Slots
Step    Matched  Condition
----   --------  ---------
[0]        4547  TARGET.Arch == "X86_64"
[1]        4547  TARGET.OpSys == "LINUX"
[3]        4547  TARGET.Disk >= RequestDisk
[5]        3017  TARGET.Memory >= RequestMemory

2993001.000: Job is running.

Last successful match: Wed Jan 3 14:46:54 2024

2993001.000: Run analysis summary ignoring user priority. Of 152 machines,
      0 are rejected by your job's requirements
      0 reject your job because of their own requirements
      0 match and are already running your jobs
      0 match but are serving other users
    152 are able to run your job
```
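In the attributes above, RequestMemory falls back to (ImageSize + 1023) / 1024 when MemoryUsage is undefined. ImageSize is expressed in KiB and RequestMemory in MiB, so adding 1023 before the integer division rounds the image size up to whole MiB. A small shell sketch of that arithmetic (request_memory_mib is an illustrative name, not an HTCondor command):

```bash
# Reproduce the fallback branch of the default RequestMemory expression:
#   (ImageSize + 1023) / 1024
# ImageSize is in KiB; adding 1023 before integer division rounds up to MiB.
request_memory_mib() {
    local image_size_kib=$1
    echo $(( (image_size_kib + 1023) / 1024 ))
}

request_memory_mib 1        # ImageSize = 1 KiB as in the example; prints 1
request_memory_mib 2097152  # a hypothetical 2 GiB image; prints 2048
```

With the ImageSize = 1 of this example, the fallback request is therefore 1 MiB.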

There are two important sections in this output:

- The reduced requirements expression:
  - It lists each requirement and how many job slots satisfy it. If a requirement has 0 matched slots, that requirement is the one preventing your jobs from running: it probably asks for something that does not exist on the cluster. Contact us if you are not sure.
- The run analysis summary:
  - It summarizes how many machines could accept your job. Again, if it states that no machine is able to run your job, confirm this against the number of slots matching each requirement.
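If the reduced requirements table is long, awk can pick out the conditions that match 0 slots. This is a sketch that assumes the column layout of the example output above (the step in brackets, then the matched slot count, then the condition); zero_match_conditions is a name made up for this page, not an HTCondor tool.

```bash
# Print the conditions of the "reduced requirements" table that match 0 slots.
# Assumes rows look like:  [5]        3017  TARGET.Memory >= RequestMemory
zero_match_conditions() {
    awk '$1 ~ /^\[[0-9]+\]$/ && $2 == 0 {
        # Blank the step and count columns, then strip the leading spaces
        # so only the condition text is printed.
        $1 = ""; $2 = ""
        sub(/^ +/, "")
        print
    }'
}

# Usage: condor_q -better-analyze 2993001.0 | zero_match_conditions
```

An empty result means every condition is satisfied by at least one slot, and the reason the job is waiting lies elsewhere (for example in the run analysis summary).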

What is happening to my running jobs?

To find out, connect to the worker node where your job is running with:

```
condor_ssh_to_job 2993001.0
```

Then, first look at what your job is doing with htop.

To see only your processes, press u (for user), select your username from the list on the left, and finally switch to tree mode with F5.

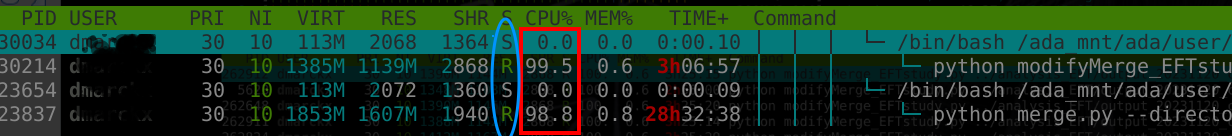

It should look something like this:

- Look at the CPU% column (in red). It is the percentage of one core used by the process, so it can exceed 100% for multithreaded programs. You should see some processes actively using the CPU, ideally close to 100%.

- The Status column (in blue) shows the state of each process (see the PROCESS STATE CODES section of the ps man page for the meaning of the letters). Ideally the main process is in S (sleeping) with a child process in R (running). If a process is in D or, worse, Z (zombie) state, you have a problem. D (uninterruptible sleep) usually means the process is waiting on storage, for example for it to come back online; it just sleeps and cannot be interrupted. Zombies have already exited but have not been reaped by their parent process, so they cannot be killed (as we all well know ;) ). Check whether there is an announcement about storage services having issues, then either wait for them to come back (your process should resume) or contact us.
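If htop is not installed on the worker node, or you want output you can paste into a message to us, ps can give a similar picture. This is a sketch assuming a Linux node with the procps version of ps (the --forest option is procps-specific); my_process_tree and flag_stuck_processes are illustrative names only.

```bash
# Non-interactive view of your own processes as a tree, roughly what htop
# shows after filtering on your user (u) and switching to tree mode (F5).
my_process_tree() {
    ps -u "$(id -u)" -o pid,stat,pcpu,etime,args --forest
}

# Read "PID STAT COMMAND" lines, as printed by `ps -o pid=,stat=,comm=`,
# and report processes in uninterruptible sleep (D) or zombie (Z) state.
flag_stuck_processes() {
    awk '$2 ~ /^[DZ]/ {
        state = (substr($2, 1, 1) == "D") ? "uninterruptible sleep (D)" : "zombie (Z)"
        print $1, $3, state
    }'
}

# Usage inside condor_ssh_to_job:
#   my_process_tree
#   ps -u "$(id -u)" -o pid=,stat=,comm= | flag_stuck_processes
```

flag_stuck_processes prints nothing when no process is in D or Z state.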

Next, look at what your process is accessing with lsof:

```
lsof -p 23837
```

Replace 23837 with the PID of your process (the first number on its htop line). To make the output easier to read, you can filter out lines you are not interested in, such as the cvmfs and /usr/lib64 entries:

```
lsof -p 23837 | grep -v -e cvmfs -e /usr/lib64
```
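If lsof is not available on the node, a rough Linux-only fallback is to read the symlinks in /proc/PID/fd. Unlike lsof it shows only open file descriptors (not memory-mapped libraries or the working directory), and it only works for your own processes. A minimal sketch; open_files is a hypothetical helper name:

```bash
# Fallback when lsof is not installed: list the files a process has open
# by reading the symlinks in /proc/<PID>/fd (Linux only).
open_files() {
    local pid=$1 fd
    for fd in /proc/"$pid"/fd/*; do
        # Skip anything that is not a symlink, e.g. the unexpanded glob
        # when the directory cannot be read.
        [ -L "$fd" ] || continue
        # Each entry is named after the file descriptor number.
        printf '%s -> %s\n' "${fd##*/}" "$(readlink "$fd")"
    done | grep -v -e cvmfs -e /usr/lib64
}

# Usage: open_files 23837
```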

OK, none of this helped ...

Then please contact us with as much information as you can. A summary of what you have already investigated helps us a lot. Please also give concrete examples, such as the job IDs concerned, so that we can investigate directly, and mention the machines you are working on.
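To gather some of this information in one place, a helper like the following can write a small text file you can attach to your message. It is a hypothetical sketch, not a site-provided tool: the function and file names are made up, and condor_q is simply skipped if it is not available on the machine you run it on.

```bash
# Collect basic debugging information about one job into a text file
# and print the file name. Hypothetical helper: extend it with the
# commands you actually ran (htop/ps, lsof, ...).
collect_job_report() {
    local job=$1
    local out="htcondor-report-${job}.txt"
    {
        echo "Job:      $job"
        echo "Host:     $(uname -n)"
        echo "User:     $(id -un)"
        echo "Date:     $(date)"
        echo
        if command -v condor_q >/dev/null 2>&1; then
            condor_q -better-analyze "$job"
        else
            echo "condor_q not available on this machine"
        fi
    } > "$out" 2>&1
    echo "$out"
}

# Usage: collect_job_report 2993001.0
```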